This article on ethics in video games is an enhanced write-up of the talk I gave at the Game Developers Conference (GDC) in March 2019.

Videogames are one of the most popular forms of recreation in the world and will generate over 150 billion US dollars in 2019, yet they often get terrible press. For example, some games are accused of making players violent or of turning them into addicts. I’ve been in the videogame industry for over 10 years, and the latest game I worked on (as director of user experience at Epic Games, until I left in October 2017), Fortnite, is played by about 250 million players. It’s also under scrutiny from many concerned parents who fear that the game might have a negative impact on their children. Some parents have reported, for example, that their children play Fortnite at the expense of their education, health, or even personal hygiene. It saddens me that videogames can have a negative impact on some people’s lives, but I’m also frustrated by the fearmongering I’ve been witnessing, most of it with no solid scientific grounding. This fearmongering (and sometimes even scapegoating) around videogames can distract the public and lawmakers from identifying and addressing the real potential issues with videogame play, and with tech in general. As a result, the game industry is often defensive when responding to the horrible things it’s accused of, which is understandable but fails to build a constructive dialog. We, videogame developers (i.e. all the professionals participating in crafting a game), have a responsibility as content providers to foster this dialog.

Here I offer my perspective on ethics in the game industry from a cognitive science standpoint (given my doctorate in cognitive and developmental psychology), coupled with my expertise in game user experience from my work at Ubisoft, LucasArts, and Epic Games, and my current work as an independent game UX consultant. Below, I discuss addiction (and the attention economy), loot boxes, dark patterns, and violence. For each of these topics, I identify the main public concern and what science has to say about it, and then offer some suggestions on what game developers could do to address the related concerns.

TL;DR: This piece is long because all the topics are nuanced and often complex, so the TL;DR (too long, didn’t read) takeaway is: be wary of shortcuts claiming that videogames have an effect (whatever that effect might be). I encourage you to read the long story…

1. Videogame “addiction”

o Main public concern regarding “addiction” to games

Many parents are worried because they see their children spending a lot of time playing video games. They hear about a “gaming addiction” affecting some players and fear that their loved ones will become addicts whose school or work performance and social relationships will suffer. There is also concern that games are “designed to be addictive” and deliver “dopamine shots” to the brain. In some countries, such as South Korea and China, video gaming has been recognized as a disorder and treatment programs have been established. China has even recently introduced a curfew on online gaming for minors (even though South Korea’s shutdown policy was not found to affect teenagers’ sleeping hours).

o What science says about addiction

What is crucial to determine here is the following: is there a specific type of addiction related to videogames requiring a specific treatment, and can games create such an addiction? Before tackling these questions, let’s first assess what an addiction is, because simply being engaged with a game and playing for several hours per day is not enough to be considered an addiction. Addiction refers to pathological behavior that has a precise definition. There are addictions tied to the use of a substance, such as alcohol, opioids, or tobacco. In these addictions, the substance generally creates a physical dependence. For example, heroin binds to opioid receptors in the brain, creating a surge of pleasurable sensation. It is extremely addictive because it disturbs the balance of hormonal and neuronal systems, and its impact cannot easily be reversed, creating profound tolerance and physical dependence. Other substances don’t create such a dramatic physical dependence, or create a dependence that is mainly psychological, which of course does not mean that their effects are less concerning.

Other addictions are said to be “behavioral,” meaning that they are not substance-related, such as addiction to sex, shopping, or sports. As I write these lines, “gambling disorder” is the only behavioral addiction listed in the DSM-5 (Diagnostic and Statistical Manual of Mental Disorders), the manual used by mental health professionals to diagnose mental disorders. The DSM-5 also mentions “Internet gaming disorder,” but only as a condition requiring further research, along with caffeine use disorder. Nonetheless, the proposed symptoms for diagnosing Internet gaming disorder are the following:

- Preoccupation with gaming;

- Withdrawal symptoms when gaming is taken away or not possible (sadness, anxiety, irritability);

- Tolerance: need to spend more time gaming to satisfy the urge;

- Inability to reduce playing, unsuccessful attempts to quit gaming;

- Giving up other activities, loss of interest in previously enjoyed activities due to gaming;

- Continuing to game despite problems;

- Deceiving family members or others about the amount of time spent on gaming;

- The use of gaming to relieve negative moods, such as guilt or hopelessness;

- Risk: having jeopardized or lost a job or relationship due to gaming.

A diagnosis of Internet gaming disorder requires gaming that causes “significant impairment or distress” and at least five of the listed symptoms within a year. It is worth noting that there is no consensus on these proposed “Internet gaming disorder” symptoms, which were adapted from substance addiction criteria, and they are in fact heavily debated.
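The counting rule in this proposed criterion is simple enough to state as code. The sketch below is purely illustrative and is not a diagnostic tool: the short symptom labels are hypothetical shorthand for the nine items listed above, and the function ignores everything a clinician would actually assess.

```python
# Hypothetical shorthand labels for the nine proposed DSM-5 criteria listed above.
PROPOSED_SYMPTOMS = frozenset({
    "preoccupation",
    "withdrawal",
    "tolerance",
    "unable_to_reduce",
    "gave_up_other_activities",
    "continued_despite_problems",
    "deceived_others",
    "mood_relief",
    "jeopardized_job_or_relationship",
})


def meets_proposed_threshold(symptoms_past_year: set, significant_impairment: bool) -> bool:
    """Illustrates the counting rule only (not a diagnostic tool): gaming must cause
    significant impairment or distress AND at least five of the nine proposed
    symptoms must be present within a year."""
    unknown = symptoms_past_year - PROPOSED_SYMPTOMS
    if unknown:
        raise ValueError(f"unknown symptom labels: {sorted(unknown)}")
    return significant_impairment and len(symptoms_past_year) >= 5


# Two symptoms and no impairment fall far short of the proposed threshold.
print(meets_proposed_threshold({"preoccupation", "mood_relief"}, significant_impairment=False))  # False
```

Note that hours played appear nowhere in the rule, which matches the point made earlier: playing a lot is not, by itself, a symptom.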

In late 2017, the World Health Organization (WHO) announced that gaming disorder would be identified as a new disorder in the upcoming edition (11th Revision) of the International Classification of Diseases (ICD). I do not dispute that some people experience problematic gaming that might distress them, and that they need help. However, the larger question is whether gaming disorder is a specific type of addiction requiring a specific treatment. Some people have problematic behavior with sports, yet there is no specific sports disorder recognized by the DSM-5 or the ICD. Some researchers estimated that only 0.3 to 1% of the general population might qualify for a potential diagnosis of Internet gaming disorder (Przybylski et al., 2016), while there are estimated to be over two billion gamers across the world. Among those who play videogames, more than 2 out of 3 players did not report any symptoms of Internet gaming disorder. Many researchers argue that problematic gaming is best viewed as a coping mechanism associated with underlying problems, such as depression and anxiety, that arise from neurochemistry independent of game play. While it is important to recognize and help people suffering from problematic gaming, the concern according to those researchers is that focusing on a “gaming disorder” would create a moral panic and stigmatize billions of gamers. The risk would then be creating a mental “illness” that didn’t previously exist, as neuroscientist Mark Lewis puts it. This is why the creation of the first gaming detox camps is raising concerns for many: the strong focus on videogames could distract from the real origins of the symptoms, such as child neglect or anxiety, and many of these videogame rehab programs are extremely expensive.
Of course, other researchers and health professionals believe that now having a recognized “gaming disorder” will raise awareness of the suffering of those addicted to games and encourage more research, ultimately helping them more adequately.

Lastly, some scientists suggest that videogames are designed to be addictive and compare some game mechanics to machine gambling, which they say could activate the brain’s so-called “reward system” in the same way as drugs like cocaine or heroin do. I will leave aside the behavioral psychology aspect of gambling (conditioning and variable rewards) for now, since I tackle these notions in the next section, and focus here on explaining why some people are concerned that videogames might give players “addictive dopamine shots.” The term “brain-reward circuitry” (deemed a gross oversimplification by some neuroscientists who deplore the rise of “neuroquackery”) is used to describe what happens in our brains when we learn, for example, that an action can lead to a “reward.” A reward is anything we perceive as a good outcome, from enjoying a tasty meal to receiving a love message from someone we care about. When we want such a reward, dopamine is slowly released in the brain to motivate us, which is useful overall, since we have survived so far by seeking good outcomes and avoiding pain. However, according to neuroscientist Mark Humphries, saying that dopamine is released when we receive a reward is not accurate. He explains that dopamine is released rapidly when we get a reward we didn’t expect. In Humphries’ words, dopamine “signals the error between what you predicted and what you got. That error can be positive, negative, or zero. It is not reward. Dopamine neurons do not fire when you get something good. They fire when you get something unexpected. And they sulk when you don’t get something you expected.”
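Humphries’ description matches the textbook idea of a reward prediction error. The sketch below illustrates it with a simple delta rule in the style of the Rescorla-Wagner model; the choice of model and the learning rate of 0.3 are illustrative assumptions, and this is not a model of actual dopamine neurons.

```python
def prediction_errors(rewards, learning_rate=0.3, initial_expectation=0.0):
    """Return the prediction error on each trial under a simple delta rule:
    error = reward received - reward predicted."""
    expectation = initial_expectation
    errors = []
    for reward in rewards:
        error = reward - expectation          # > 0: better than expected, < 0: worse
        errors.append(error)
        expectation += learning_rate * error  # nudge the prediction toward what happened
    return errors


# A reward arrives on the first five trials, then is unexpectedly withheld.
errors = prediction_errors([1, 1, 1, 1, 1, 0])
print([round(e, 2) for e in errors])  # [1.0, 0.7, 0.49, 0.34, 0.24, -0.83]
```

The error is largest when the reward is a surprise, shrinks toward zero as the reward becomes predictable, and turns negative when an expected reward fails to arrive, which is the “sulking” Humphries describes.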

So it’s neither surprising nor a brain malfunction for dopamine to be released when we play a fun videogame that makes us want to reach goals in a challenging environment, where we can also experience setbacks or unexpected good outcomes. If it weren’t, playing games would be a boring activity. Dopamine is present in our brains all day long as we learn about our environment, feel motivated to do something, receive messages from friends, do our daily meditation, play games, or even move around. Parkinson’s disease, for example, is characterized by a low level of dopamine in parts of the brain. So the new Silicon Valley “dopamine fasting” trend does not make any sense: you do not want lower dopamine levels in your brain. What is true, though, is that certain drugs, like methamphetamine, cause a massive surge of dopamine in the brain, which is why they are so addictive. The release of dopamine itself is not the problem; the amount released can be. A study by Koepp et al. published in Nature in 1998 showed that playing video games increased dopamine levels in the brain by about 100%. That may seem like a lot, but sex produces a similar increase. Meditation was also found to increase dopamine levels by 65%. By comparison, an illicit drug like methamphetamine increases dopamine levels by over 1000%. As Markey and Ferguson (2017) point out, if videogames, enjoyed across the world by over 2 billion people, were as addictive as illicit drugs, we would have a massive epidemic to address.

In sum, pathological gaming does exist and some people do need help. However, the scientific debate around the existence of a specific addiction to videogames, its causes, and its treatments is far from resolved. This sensitive topic requires further research, especially given the attention it gets from policy makers. In the meantime, distinguishing between excessive gaming, problematic gaming, and addictive gaming (Kuss et al. 2017) can help avoid stigma, avoid downplaying the suffering of genuine addiction, and dispel the moral panic overall.

o What could the game industry do regarding problematic gaming?

First and foremost, game studios could collaborate with researchers and mental health professionals to better understand problematic gaming and, ideally, find ways to identify and help struggling gamers (all the while respecting privacy laws). This applies especially to the few game studios blessed with a hugely successful game (since most game creators struggle to make a living), and even more so if that game is massively played by children. It’s completely understandable for parents to be concerned when their child plays videogames for long hours every day, so in this case it’s particularly important to help distinguish between passionate engagement with a game and pathological gaming.

Beyond the problem of pathological overuse of games, we need to look into certain mechanics the game industry (and the tech industry overall) often uses. Ethicist and ex-Googler Tristan Harris has been raising awareness (albeit with a sprinkling of fearmongering that lacks scientific grounding) of how technology exploits “our psychological vulnerabilities.” Mechanics like bottomless scrolling (such as on Facebook or Twitter), autoplay (such as on YouTube or Netflix), or push notifications (used by all social media and many mobile games) could encourage us to engage, or stay engaged, with a platform even if we had no clear intention to do so initially. This is what we call the “attention economy”: all platforms are competing to grab and keep people’s attention, and some questionable techniques are sometimes used to this end. Moreover, some techniques are downright unethical and are labeled dark patterns, which I address later. A compelling example of such an engagement-sustaining technique described by Harris is the Snapstreak feature on Snapchat. This feature shows the number of consecutive days two Snapchat users have communicated with each other. Users are then encouraged to keep the streak growing, since breaking it will ultimately feel like a punishment. Tweens and teenagers are particularly vulnerable to this feature because connecting with friends is very important to them, because no one wants to be the jerk who breaks a 150-day streak, and because the brain is immature until adulthood. One area of the brain, the prefrontal cortex, keeps developing until about 25 years of age. This part of the brain, among other things, controls impulses and automatic behaviors. Thus, persuasive techniques that aim to keep people engaged with a platform are somewhat more difficult for children and teenagers to resist, and the younger they are, the harder it is for them.
The Marshmallow Test is a good example illustrating how difficult it can be for some children to resist the temptation of eating candy and to delay gratification, which is why they need their parents and educators to set limits for them (note: the Marshmallow study was recently called into question regarding the links it claimed between early delay of gratification and later outcomes; I’m only referring here to the fact that children can have difficulty delaying gratification). This is not to say that adults can always resist these persuasive mechanics effectively; human irrationality and susceptibility to bias are very well documented. The Persuasive Tech Lab at Stanford, for example, studies computers as persuasive technology (aka “captology”), including the design and ethics of interactive products. While we can debate whether adults should bear full responsibility for their decision-making, I would argue that it’s clearly unethical to deliberately use persuasive techniques on children, who are less equipped than adults to resist them, when the intention behind them is business-oriented. In many cases, game developers do not intentionally try to exploit children’s immaturity, yet when such a concern is uncovered, the issue must be addressed. Even though it’s not unhealthy for children to spend a reasonable amount of time playing videogames (and play is very important overall to keep our brains sharp), the brain typically needs a variety of activities to develop in the best conditions. Children should therefore be encouraged to take part in various activities instead of being encouraged to play only one type of game for too long.

Game developers need a better understanding of the psychological impact of certain mechanics they use (sometimes simply because they saw them in another game they played, or because telemetry data revealed that they improved retention or conversion), especially if their games are not rated M for Mature or AO for Adults Only in the ESRB rating system (or 18+ in the European PEGI rating system). Yet it would be better user experience (UX) practice for developers to rethink, for all audiences, how some games constantly reward engagement or even punish disengagement. Some games punish players who do not play every day, for example by making them miss out on a highly desirable reward because they didn’t log in enough consecutive days, or because they didn’t gather enough currency by playing every day to earn a reward that expires at the end of a season. We could encourage healthier gaming behavior by rewarding disengagement, as with the rest system in World of Warcraft, which lets players who took a break gain additional experience points when they resume playing. At the very least, we should avoid punishing disengagement in all our games, and think of ways to genuinely encourage breaks in games played by children (even if the game wasn’t initially targeted at children). By “genuinely encouraging breaks” I mean designing games that reward breaks, as WoW does, rather than merely displaying a message telling kids they should consider taking a break, since such messaging is largely ineffective.
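To make the contrast between punishing and rewarding disengagement concrete, here is a minimal sketch of both designs: a consecutive-day streak, where a single missed day wipes out progress, and a rest-style bonus, where time away accrues extra experience. All names and numbers are hypothetical; they are not World of Warcraft’s actual values or implementation.

```python
from dataclasses import dataclass
from datetime import date, timedelta


def login_streak(login_days: set, today: date) -> int:
    """Consecutive-day streak ending today. One missed day resets it to zero,
    which is how a streak punishes disengagement."""
    streak, day = 0, today
    while day in login_days:
        streak += 1
        day -= timedelta(days=1)
    return streak


# Hypothetical tuning values, NOT World of Warcraft's real numbers.
REST_XP_PER_HOUR_OFFLINE = 50
REST_XP_CAP = 3000


@dataclass
class Player:
    xp: int = 0
    rested_pool: int = 0

    def take_break(self, hours: float) -> None:
        """Time away fills a 'rested' pool, up to a cap."""
        earned = int(hours * REST_XP_PER_HOUR_OFFLINE)
        self.rested_pool = min(REST_XP_CAP, self.rested_pool + earned)

    def gain_xp(self, base_xp: int) -> int:
        """While rested, each XP gain is doubled; the bonus is drawn from the pool."""
        bonus = min(base_xp, self.rested_pool)
        self.rested_pool -= bonus
        self.xp += base_xp + bonus
        return base_xp + bonus


# Logged in Jan 1-3, skipped Jan 4, returned Jan 5: the streak is back to 1...
logins = {date(2019, 1, d) for d in (1, 2, 3, 5)}
print(login_streak(logins, date(2019, 1, 5)))  # 1

# ...whereas the rest design treats a day off as something to reward.
player = Player()
player.take_break(hours=24)  # rested pool is now 1200
print(player.gain_xp(100))   # 200 (100 base + 100 rested bonus)
```

Both designs reward regular play, but only the streak makes skipping a day costly.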

Lastly, game studios and game journalists should stop abusing the term “addiction.” Many games are described as “addictive” as if this were a good thing, to express that the game is fun and engaging. Engagement is critical to the success and enjoyment of a game; if we’re not engaged, we are not motivated to play a given game, which likely means that the game is boring or has too many barriers to its enjoyment (aka “user experience” issues, or UX issues). However, good UX has nothing to do with “dark patterns” (designs with purposely deceptive functionality that is not in users’ best interest), which I describe below. And, sure, addiction doesn’t occur with something that is not perceived as pleasurable. But addiction is a pathology; there is nothing fun about it. Some people need help to overcome an addiction; let’s not downplay their suffering by using the term lightly.

2. Loot boxes and gambling

o Main public concern regarding loot boxes

There is a general concern that loot boxes are a form of gambling. Parents fear that loot boxes could “condition” their children to play and spend more, and that they could contribute to an increase in problem gambling among children and teenagers.

o What science says about loot boxes

We first need to establish the difference between the legal definition of gambling and the psychological mechanism behind it. The legal definition of gambling in the United States is placing a bet in exchange for something of value (such as buying a lottery ticket) or playing a game of chance in the hope of winning anything of value (such as money). Per this definition, loot boxes (or loot crates, or gacha) that can be redeemed with an in-game currency (such as gems or coins) to receive a randomized selection of virtual loot (such as a rare weapon or skin) could be considered a form of gambling, although legal wrangling over this continues internationally. One might argue that the in-game currency required to buy loot boxes is not necessarily tied to real-life money (for example, if players obtain that currency, or are awarded loot boxes directly, through gameplay) and that most games do not allow cashing out (exchanging an item received for real money). However, most loot boxes can be obtained by spending hard currencies that can in turn be bought with real money, and secondary markets where players trade loot for money do exist. Either way, the important notion in the legal definition is “something of value,” and I would argue that a rare item, if desired by players, will be felt as valuable within the game system even if it’s virtual.

Whether or not we agree that loot boxes are gambling, the psychological mechanism behind both is the same, even though the outcome is not: both are a form of intermittent reward on a variable ratio reinforcement schedule (aka variable rewards). Before explaining what this is, let’s take a step back to explain human motivation. There is no behavior without motivation, which means that if you’re not motivated to do something, you simply won’t do it. There are many theories of human motivation, but we currently do not have a unified theory that can explain all of our behaviors. For the sake of simplicity, let’s focus here on two main types of motivation: extrinsic and intrinsic. Extrinsic motivation is when you do something in order to get something else, such as accomplishing a mission to get loot, taking a job you don’t find particularly interesting to earn money, or working on a school assignment you’re not excited about to get a good grade. It’s all about performing a specific behavior (a task or an action) to obtain a reward that is extrinsic to the task. Intrinsic motivation, by contrast, is when you do something for the sake of doing it, such as learning to play the guitar because you love music, or playing a videogame you enjoy.

Let’s now take a deeper dive into extrinsic motivation: accomplishing a task to get a reward. This type of motivation is possible because we implicitly learn to associate a stimulus with reinforcement, either rewarding or aversive. The learned association between a stimulus and a response is called conditioning. We distinguish between classical conditioning, which happens passively (e.g. learning to associate the sound of a fire alarm with imminent danger), and operant conditioning, which requires an action from the individual (e.g. learning that a snack in a vending machine can lead to a pleasurable experience if we put money in the machine and press the correct button). Thus, operant conditioning is when you learn the connection between a stimulus and the probability of getting a reward if you take the appropriate action. Sometimes a long time elapses between the behavior and the reward (e.g. solving a math problem in a school exam and getting the result from the professor a few days later); sometimes the reward is immediate (e.g. solving the same math problem on an interactive platform and getting a good grade right away). The closer in time the reward is to the action, the more efficiently we learn the association. This is one of the reasons why videogames can be beneficial in education: they give immediate feedback on players’ actions. Throughout our lives, we learn a great deal through conditioning. Just consider road safety: you’re conditioned to fasten your seatbelt when you hear your car beeping; you’ve learned that another type of beep (associated with a light on the dashboard) means you are low on gas, which motivates you to go to the nearest gas station to avoid a punishment (running out of gas); you’ve been conditioned to stop at a red light to avoid getting a ticket or, worse, causing an accident; and so on. And yes, the brain releases “fast” dopamine (i.e. a big fluctuation) when we learn that an action leads to a reward, so that we can later anticipate getting this reward again: this is how we may become motivated to do certain things in life (which then seems to involve slow dopamine changes). As mentioned in the previous section, dopamine is, among many other things, the neurochemical involved in motivation and learning. So dopamine is released in your brain right before eating a square of chocolate, having sex, seeing a friend, or doing your morning meditation, and as you hope to redeem something cool from a loot box.

Although conditioning is everywhere in humans and other animals, and helps us survive by driving us to seek pleasure and avoid pain, it has a bad reputation and is widely misunderstood by the public today. This is mainly because of the infamous behavioral psychologist B.F. Skinner, who did a lot of research on operant conditioning in the middle of the twentieth century. The typical apparatus Skinner used to conduct his experiments was the operant conditioning chamber, now called a “Skinner box.” An animal (usually a rat or a pigeon) would be isolated in the chamber, which contained a food dispenser and a lever. After a specific stimulus occurred (such as a light or a sound), if the rat pressed the lever, a food pellet would be delivered into the food dispenser. Skinner found that, as you can imagine, rewarding a specific behavior (giving food if the correct lever is pressed) tends to make the rat increase this behavior (i.e. press this lever more often), while punishing a behavior (giving an electrical shock if the wrong lever is pressed) tends to decrease that behavior.

Conditioning is not controversial in itself; it’s simply an efficient way to learn how to avoid what we consider undesirable outcomes and how to get more of the rewards we care about. This instrumental learning certainly has a lot of merits and was widely used in the 20th century in education, the military, and the workplace (and still is today). However, it has also been widely criticized because this paradigm ignores important unobservable aspects of learning (such as attention or memory), and behaviorism enthusiasts often overlooked undesirable side effects. For instance, punishments can induce stress or aggression and can ultimately be detrimental to learning (see for example Galea et al., 2015, for the impact of punishment on retention in motor learning, and Vogel and Schwabe, 2016, for the impact of stress in the classroom). Not to mention that stress and anxiety can also damage someone’s health (rat or human). Skinner used physical punishments to torture the poor rats and pigeons in his lab in the name of science. Even when the punishment is not physical (say, being shamed for eating a massive steak in the context of the climate emergency), it is not as effective for learning as being rewarded for the opposite behavior (getting a token of appreciation for choosing the vegan option). Physical punishments have obvious, heavy consequences on well-being and are thus clearly intolerable.

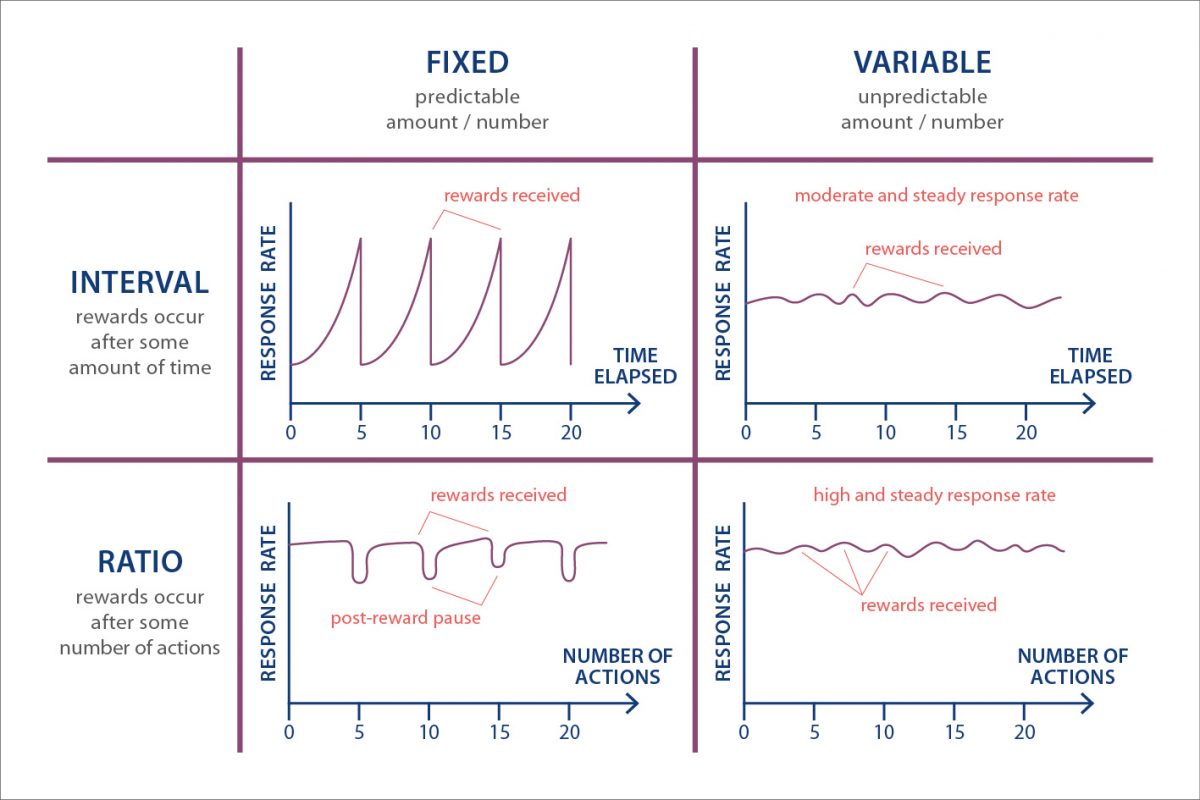

Let’s get back to rewards (I promise I’m circling back to loot boxes soon, just bear with me a little longer): what Skinner discovered is that intermittent rewards (when the behavior is only sometimes rewarded) led to more engagement with the lever than continuous rewards (when the rat got a pellet of food every time it pressed the lever). Intermittent rewards can be based on time, which we call interval, or on the number of actions performed, which we call ratio. And both can be either predictable (fixed) or unpredictable (variable). Here are examples of each in videogames:

- Fixed interval rewards: daily rewards (you can log in every day to get a new reward) or time-based rewards (you know that your building in Clash of Clans will take 10 minutes to build).

- Variable interval rewards: the cherry bonus in Pac-Man that appeared at certain times and was not, as far as I could tell, based on the player’s actions in the game, or the rare spawn in an MMO that will appear in an area at an unpredictable moment.

- Fixed ratio rewards: you need to kill 10 zombies to get an achievement, or you need to buy two skills in a skill tree in order to reach the one you are actually interested in.

- Variable ratio rewards: any reward involving chance. So loot boxes, of course, and card packs, but also procedurally generated loot on a map (e.g. finding a hidden treasure as we explore the world), and any time RNG (random number generation) is used (e.g. getting a critical hit when fighting an enemy). Outside of video games, it means any game using some randomness (so… quite a lot), such as all card games and all games using dice.
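The four schedules can be sketched as small Python classes that decide whether a given response is rewarded. This is a simplified model under stated assumptions: responses arrive one per integer time step, the variable-ratio schedule is approximated by a constant reward probability per response (strictly speaking a “random ratio” schedule), and all class names and parameters are hypothetical.

```python
import random


class FixedRatio:
    """Reward every n-th response (e.g. 'kill 10 zombies')."""
    def __init__(self, n):
        self.n, self.count = n, 0

    def respond(self, now):
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reward each response with probability 1/mean_n: on average every
    mean_n responses, but unpredictably (e.g. a loot box or a critical hit)."""
    def __init__(self, mean_n, rng):
        self.p, self.rng = 1 / mean_n, rng

    def respond(self, now):
        return self.rng.random() < self.p


class FixedInterval:
    """The first response once `interval` time units have passed is rewarded
    (e.g. a daily login reward)."""
    def __init__(self, interval):
        self.interval, self.available_at = interval, interval

    def respond(self, now):
        if now >= self.available_at:
            self.available_at = now + self.interval
            return True
        return False


class VariableInterval:
    """Like FixedInterval, but the wait is random (e.g. a rare spawn)."""
    def __init__(self, mean_interval, rng):
        self.mean, self.rng = mean_interval, rng
        self.available_at = rng.expovariate(1 / mean_interval)

    def respond(self, now):
        if now >= self.available_at:
            self.available_at = now + self.rng.expovariate(1 / self.mean)
            return True
        return False


def count_rewards(schedule, responses):
    """Simulate one response per time step, from t = 1 to t = responses."""
    return sum(schedule.respond(t) for t in range(1, responses + 1))


rng = random.Random(2019)
for name, schedule in [
    ("fixed ratio, n=10", FixedRatio(10)),
    ("variable ratio, mean 10", VariableRatio(10, rng)),
    ("fixed interval, 10 steps", FixedInterval(10)),
    ("variable interval, mean 10", VariableInterval(10, rng)),
]:
    print(name, count_rewards(schedule, 100))
```

Over 100 responses, the two fixed schedules each pay out exactly 10 rewards and the variable ones pay out roughly 10 on average; what differs is predictability. In the ratio schedules, responding more often also brings rewards sooner, whereas in the interval schedules extra responses between rewards earn nothing.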

In sum, there are four types of intermittent rewards, and the table below describes their influence on the rat’s engagement with the lever. When rewards are predictable (fixed), there is often a pause after the reward is obtained: we got what we came for, or what we worked a specific amount of time for, so we can take a break. But when rewards are unpredictable (variable), we observe steadier engagement, because we might get a reward again if we stick around or keep opening loot boxes. And when the reward is both unpredictable and dependent on behavior (variable ratio), engagement is both high and steady. This is why, since Skinner’s experiments, we have known that variable rewards such as loot boxes are usually more engaging than other rewards. Our level of engagement will depend on how valuable we perceive the reward to be, our odds of obtaining it, and many other factors (since, after all, we are not exactly like rats and our brains are a bit more complex). Getting unpredictable rewards is exciting, and they can be of different kinds: a surprise visit from your best friend, a surprise gift from your loved one, a surprise bonus at work, drawing a royal flush, throwing double sixes, etc. Here again, variable rewards are not necessarily a bad thing per se. What becomes highly questionable, ethically speaking, is tying those variable rewards to monetization, because we know they tend to push players to pay more to increase their odds of getting something cool. Thus, loot boxes are similar to gambling (as are charity bids, although these are for a good cause and the feedback is not immediate).
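The “increase their odds” part is simple probability. Assuming each loot box independently drops a given rare item at a fixed rate p (the 1% rate below is hypothetical, and real games may add pity timers or other rules), the chance of getting it at least once after n boxes is 1 - (1 - p)^n:

```python
import math


def chance_of_at_least_one(drop_rate: float, boxes_opened: int) -> float:
    """Probability of at least one rare drop in `boxes_opened` independent boxes."""
    return 1 - (1 - drop_rate) ** boxes_opened


def boxes_needed(drop_rate: float, target: float) -> int:
    """Smallest number of boxes for which the chance of at least one rare drop
    reaches `target` (0 < target < 1)."""
    return math.ceil(math.log(1 - target) / math.log(1 - drop_rate))


p = 0.01  # hypothetical 1% drop rate
for n in (1, 10, 100):
    print(n, round(chance_of_at_least_one(p, n), 3))
print(boxes_needed(p, 0.9))  # 230
```

Under these assumptions, about one player in three still has not obtained the item after opening 100 boxes (0.99^100 ≈ 0.37), and reaching a 90% chance takes 230 boxes: every extra purchase raises the odds a little without ever guaranteeing the reward, which is part of why tying such schedules to money is ethically delicate.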

That being said, we can decide as a society that, for example, casinos are legal in certain places and only accessible to adults. Humans, after all, still have some free will, and it’s not as if variable rewards made a certain behavior absolutely compulsive all the time for everyone. Sure, it might take more willpower to refrain from engaging, or to stop, when a variable reward is at play, but most of us adults manage to do so (even if our will fails us from time to time). However, let’s not forget that gambling disorder is recognized as an addictive disorder in the DSM-5 and that some people do suffer from pathological gambling. This is why gambling laws are quite strict and why compulsive gamblers can ask to be barred from entering casinos. No such option exists for videogame loot boxes, and since many countries do not recognize loot boxes as gambling, they are often not regulated.

What is much more concerning is the potential impact of loot boxes on children and teenagers, although it is still unclear today. As I mentioned in the previous section on addiction, children and teenagers do not have a mature prefrontal cortex, which can make automatic and conditioned responses much harder for them to control when need be. If you were wondering why children are so bad at refraining from an action when they didn’t hear “Simon says” first, this is why. This is also why teenagers have a greater tendency to engage in risky behaviors than adults past 25 years of age (approximately). So loot boxes tied to monetization in a game can potentially, in my opinion, be an issue if children or even teenagers play this game, especially when the game is popular and they feel particularly compelled to buy loot boxes until they obtain a prize that is fashionable at school. Peer pressure or even bullying, which used to happen when a teenager wasn’t wearing fashionable brands, can happen today over trendy virtual goods. The issue is that game developers cannot easily control who’s playing their games. Unlike casinos, studios cannot easily ID players before letting them access monetized variable rewards (there are privacy concerns, and age gates are easy to cheat).

But what about physical card packs, you might ask? Aren’t they also monetized variable rewards? Indeed they are! Yet most of us grew up with those card packs around us and it didn’t seem to be such a big deal. This is true, but mostly because there are many natural obstacles between the initial desire for a physical card pack and actually getting it: as a kid you need to ask your parents for money; if they agree, you need to go to the store; the card packs need to be in stock; and lastly you need to hand over physical money in exchange for them (money you could use for something else that also feels rewarding, like a delicious treat at the ice-cream place you walked past on your way to the store). At last, you can open your card pack and see if you received the cards you were seeking. If you didn’t get what you were hoping for and you have no money left, you can only go back home and hope to convince your parents to give you money again. In this fictional scenario, all these steps force the child to be patient and persevering, and to delay gratification. Those steps do not exist in games where you can pay with a virtual currency, which is often emotionally disconnected from actual money: the effort to obtain a loot box often boils down to a single click. To be clear, this is just an anecdotal example, not scientifically based, to explain one main difference between physical card packs and loot boxes, but some researchers are looking into their impact beyond the mere absence of physical friction. For example, it is possible (although not recommended if we are concerned with ethical practices) for a videogame system to decide that players will receive an awesome reward the first time they open a loot box, thus creating an expectation that this could happen again. In physical card packs this cannot be controlled, and in casinos it is regulated: monetary rewards are paid out randomly at a set rate (although casinos can control the level of fanfare, such as sound and visual effects, that players get as they receive even a small reward, such as 50 cents).

This is why some countries like Belgium are banning loot boxes in games altogether, since they can be considered gambling (at least on a psychological level) and studios cannot possibly control whether underage players are going to access the game and sink both time and money into getting those desirable variable rewards.

o What could the game industry do regarding loot boxes?

It is my opinion that avoiding loot boxes in all games that aren’t rated Adults Only or Mature within the ESRB rating system (or 18+ with PEGI) is the most ethical option, given the issues described above. And when loot boxes are used, they should be truly random (i.e. players get items at a fixed rate that is not manipulated, and this rate should be transparent). Gambling advertising should also be banned in games that children can play. According to a study of 11-16 year olds by the Gambling Commission in Great Britain, the number of child problem gamblers has quadrupled in the past two years. Although it’s hard to get a clear understanding of the impact of gambling ads on children (correlation does not mean causation), protecting children from adult content would be a good ethical practice. Of course this isn’t as simple as it seems, since game studios often have no visibility into what ads will be displayed in the space they sell to a third party. But raising awareness and pressuring ad companies would likely help.
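To make “completely random, at a rate that is not manipulated and is transparent” concrete, here is a minimal Python sketch. The tiers and odds are hypothetical, invented purely for illustration, and this is not any studio’s actual system. The key property is that every open draws from the same published table and takes no player state, so there is no way to special-case the first open or a player’s spending history.

```python
import random

# Hypothetical drop table (tier -> probability). The odds are invented for
# illustration; the point is that these exact numbers are shown to players.
DROP_TABLE = {
    "common": 0.80,
    "rare": 0.15,
    "legendary": 0.05,
}

def published_odds(table):
    """Return the odds exactly as players would see them, as percentages."""
    return {tier: round(p * 100, 2) for tier, p in table.items()}

def open_loot_box(rng, table=DROP_TABLE):
    """Draw one tier using only the published table.

    No player state (open count, amount spent, session length) is passed in,
    so the draw cannot, for example, guarantee a legendary on the first open.
    """
    tiers = list(table)
    weights = [table[t] for t in tiers]
    return rng.choices(tiers, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(2019)  # seeded only so the demo is reproducible
    print(published_odds(DROP_TABLE))
    counts = {t: 0 for t in DROP_TABLE}
    for _ in range(100_000):
        counts[open_loot_box(rng)] += 1
    # Observed frequencies should land close to the published odds.
    print({t: c / 100_000 for t, c in counts.items()})
```

Because the draw function only sees the table, the published odds and the real odds cannot drift apart, which is what makes disclosure meaningful.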

For adult games, unless the game is about gambling and is regulated as such, game studios might want to tightly control secondary marketplaces and explore more transparent and less enticing monetization techniques. Here again, this is easier to consider for studios that are earning big revenues from a highly popular game. Smaller studios and indie developers will have a hard time cutting what is sometimes the only way they have found to stay afloat. It’s worth noting that randomly given rewards have to be perceived as desirable. Throwing dice for a chance to win 1,000 dollars in a casino game won’t have the same impact on players as casually throwing dice at home outside of any context (although clicking on cows, as in Ian Bogost’s Cow Clicker, seems to be perceived as enough of a reward to keep players clicking). Thus, getting a chance to unlock cool characters from the latest Star Wars movie in a popular game will likely feel more rewarding (but also more controlling, which is why it can backfire) than winning a character from an obscure movie no one knows about, in a game that few people play. It is therefore up to the big players to lead by example, which will hopefully set ethical standards for our whole industry.

3. Dark patterns

o Main public concern regarding dark patterns

There is a general concern that tech and videogames “manipulate” us into doing something that we didn’t want to do. This topic overlaps with the concept of attention economy, which I described in the section about addiction, with the idea that tech companies “force” us to engage with a platform longer than we initially intended.

o What science says about dark patterns

A dark pattern describes a design with a purposely deceptive functionality that is not in users’ best interest. But before diving into the topic of dark patterns, let’s play a little game: imagine that in a store a bat and a ball cost eleven dollars in total. Knowing that the bat costs ten dollars more than the ball, how much does the ball cost? Try to answer fast. If you replied that the ball costs one dollar, you fell into a common trap. This puzzle is adapted from the “Cognitive Reflection Test” (CRT) by Shane Frederick (2005), which included two more questions (the original version uses $1.10 and $1.00). Across all the participants in Frederick’s study, 83% answered at least one of the three questions incorrectly, and even among MIT students, the best-performing group, about half missed at least one (the correct answer here is 50 cents, by the way). Although we consider ourselves logical beings capable of rational thinking, our daily decisions and problem solving are actually skewed by the many limitations of our mental processes and by unconscious biases.
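Writing the puzzle as two equations shows why the intuitive answer fails. Here $b$ is the ball’s price and $t$ the bat’s price, in dollars (the symbols are ours, chosen for illustration):

```latex
\begin{aligned}
t + b &= 11 \\
t &= b + 10 \\
(b + 10) + b &= 11 \;\Rightarrow\; 2b = 1 \;\Rightarrow\; b = 0.50
\end{aligned}
```

Checking the intuitive answer exposes it: if $b = 1$, then $t = 11$ and the total is $12$, not $11$. Catching this requires the slower, deliberate check that a quick answer skips.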

In his book Thinking, Fast and Slow, PhD in psychology, researcher, and Nobel laureate in economics Daniel Kahneman explains that the human mind has two operating modes, so to speak: System 1 and System 2. System 1 is fast, instinctive, and emotional thinking. System 2 is much slower, deliberate, logical, and involves effortful mental activities such as complex computations. Both systems are active whenever we are awake and they influence one another. Irrational thinking occurs mostly because System 1 operates automatically and is prone to intuitive errors, and because System 2 might not be aware that these errors are being made.

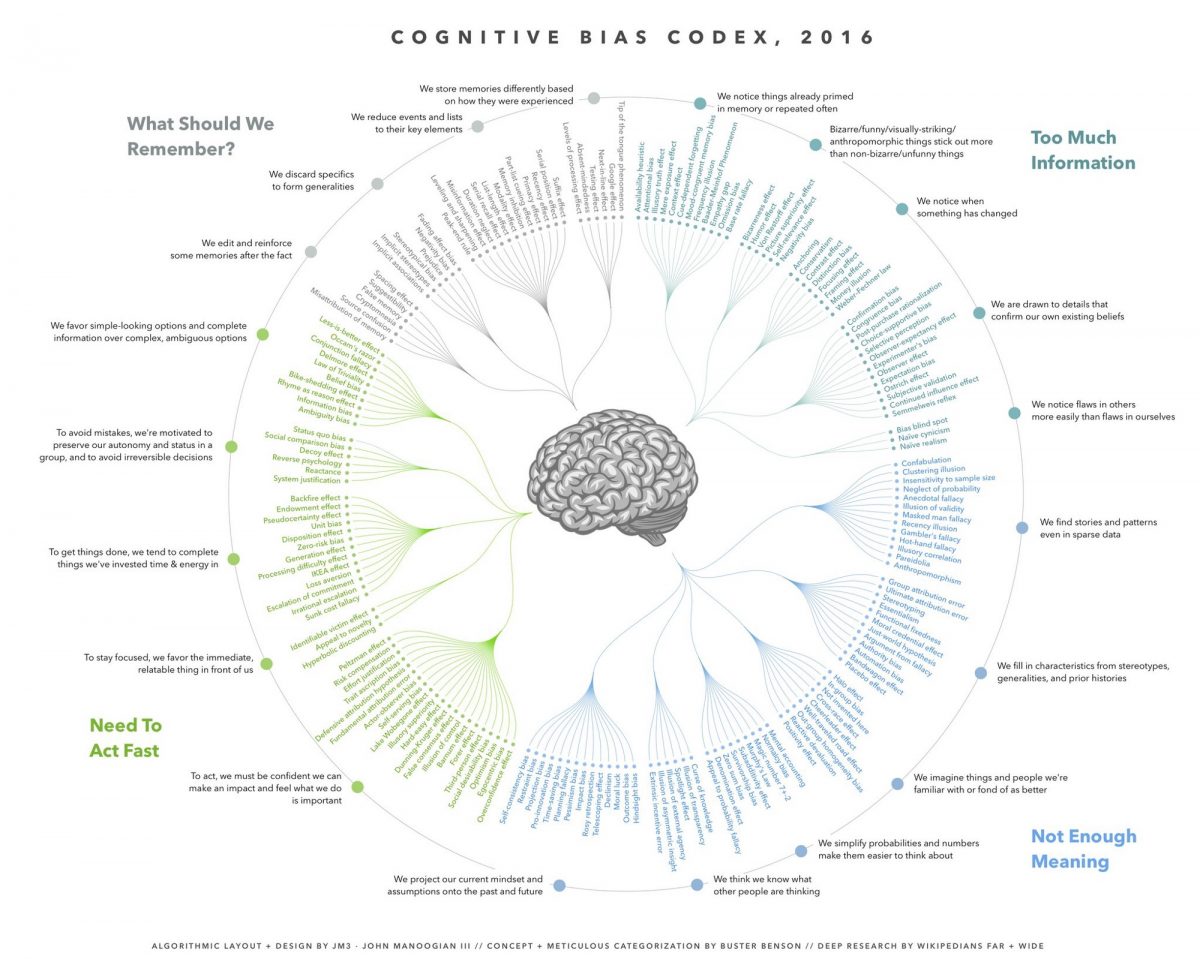

To explain what these cognitive biases are, let me take the more familiar example of optical illusions. In the illusion presented above (the Ebbinghaus illusion), the two central purple circles are the same size, although we perceive the central circle on the left as smaller than the one on the right because of its size relative to the circles surrounding it. The same principle applies to cognitive biases. Cognitive biases (or cognitive illusions) are patterns of thought that bias our judgment and decision making. Just like optical illusions, they are very difficult to avoid even when we are aware of them. In his book Predictably Irrational (2008), professor of psychology and behavioral economics Dan Ariely went into greater detail on how cognitive biases impact our everyday lives, inducing systematic errors in our reasoning and financial decision making. For example, “anchoring” is a cognitive bias somewhat comparable to the optical illusion presented above: we tend to rely on previous information (the anchor, such as the size of the circles surrounding the central purple circle in the illusion) to judge a new piece of information by comparing the two. Marketers use anchoring to influence our decisions. Suppose you see a videogame blockbuster on sale for $29, with its initial price of $59 displayed next to it. The $59 price tag is the anchor (emphasized by the strikethrough applied to it) to which you compare the current price. This makes you recognize a good deal, and it might sway you into buying this title over another one that also costs $29 but is not on sale. Even though the cost is the same, the game that is not on sale might seem less interesting because it doesn’t make you feel that you are saving money ($30), unlike the game on sale.
Similarly, the same $29 price can seem less of a good deal if other blockbusters are on sale at $19, representing an even better deal. Maybe you will end up buying a game you were less interested in just because it offered a better deal, or maybe you will buy more games than the one(s) you were initially interested in, because you just cannot miss this compelling opportunity to make such a good transaction. In the end, you might even spend much more money than your videogame budget allows. We tend to compare things to one another to make decisions, and this influences our judgment. The worst part is that we are mostly unaware of being influenced by these biases. I’m not going to list them all here since there are too many, but product manager Buster Benson and engineer John Manoogian created the Cognitive Bias Codex, a chart that sorts and categorizes the cognitive biases listed on the dedicated Wikipedia page.

User experience (UX) is a mindset that allows creators such as game developers to offer a better experience to their players by taking into account human brain limitations and cognitive biases. When considering UX, we place players first and ensure that they will have an engaging and usable experience, which also implies consideration of inclusion, accessibility, and protection against antisocial behaviors that can occur in multiplayer games. Dark patterns occur when, instead of placing players at the center of the creation process, companies place their business goals at the center, and when brain limitations and implicit biases are exploited to make more money at the expense of players. The difference between UX and dark patterns is similar to the difference between a magician manipulating our brain limitations to entertain us and a pickpocket doing so to surreptitiously steal our smartphone or wallet. Psychology researchers have been studying how certain marketing tricks work. Take the example described by Dan Ariely in the aforementioned book (2008) of a subscription offer for a magazine, as seen on its website. Three options were proposed:

- Option A: one-year subscription to the online version, for $59.

- Option B: one-year subscription to the print edition, for $125.

- Option C: one-year subscription to both the online and print versions, for $125.

Options B and C have the same price ($125), although option C clearly has more value than B. Intrigued by this offer, Ariely ran a test on 100 MIT students to see which option they would pick. Most students chose option C (84 students), 16 students chose option A, and none of them chose option B, which therefore seems a useless option; who would want to pay the same price for less value anyway? So Ariely removed option B and ran the test again with new participants. This time, most students chose option A (68 students), while 32 students chose option C. People’s behavior can be influenced by their environment, and this specific case is called the “decoy effect”, whereby a distracting (decoy) option (B) makes another option (C) seem more attractive than it would be without the decoy. We could therefore argue that the decoy effect, very often used in free-to-play videogame stores (for example in gem package offers) but also used by many other industries and businesses, is an example of a dark pattern, because it influences people to buy a more expensive option than most of them would have chosen if no decoy were present.
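The business incentive behind the decoy becomes obvious once the choice counts are turned into revenue. This small Python sketch simply multiplies each option’s price by the number of students who picked it in each run; it assumes, for illustration only, that every choice would have been an actual purchase.

```python
# Prices and choice counts as reported in the text (100 students per run).
PRICES = {"A": 59, "B": 125, "C": 125}

with_decoy = {"A": 16, "B": 0, "C": 84}
without_decoy = {"A": 68, "C": 32}

def revenue(choices, prices=PRICES):
    """Total revenue: price times number of buyers, summed over options."""
    return sum(prices[option] * count for option, count in choices.items())

def share(choices, option):
    """Fraction of participants who picked the given option."""
    return choices.get(option, 0) / sum(choices.values())

if __name__ == "__main__":
    print(revenue(with_decoy))     # 11444
    print(revenue(without_decoy))  # 8012
    print(share(with_decoy, "C"), share(without_decoy, "C"))  # 0.84 0.32
```

Nobody buys the decoy itself, yet its mere presence raises the revenue from the same number of students by more than $3,000, because it shifts most of them from the $59 option to a $125 one.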

Some situations can clearly be labeled as dark patterns (increasing the company’s revenue at the expense of users), while others can be described as a “nudge” (benevolent, such as the beep from your car nudging you to fasten your seatbelt). On the other hand, “good UX” is when users can accomplish what they want with a system without unnecessary friction (such as confusion or frustration), while “bad UX” (or “UX fail”) is when users encounter issues and friction that are not by design. Here is a clear example of a dark pattern from Amazon. Let’s say you placed a few items in your cart and are ready to check out, so you click on the “check out” button, which leads you to an intermediate screen. Given that human attention is scarce, we tend not to read the fine print. This is why in UX we make sure calls to action are clear and visible. But what happens here is that if you click on the big orange button inviting you to “Get Started”, thinking that you are getting started with checking out, you actually sign up for Amazon Prime. On this screen, if you just want to check out without signing up for Amazon Prime, you need to click on the small link at the bottom left, which shames you on top of it because “you won’t save $18.95 on this order, you fool,” while you don’t have any information on how much an Amazon Prime subscription costs. I’m guessing that a lot of people enrolled in Amazon Prime unwillingly, and then maybe kept their subscription because it’s quite a hassle to unsubscribe. If you are interested in dark patterns, you can take a look at the website darkpatterns.org, which shows a lot of examples (to avoid, of course).

UX is not about tricking users into doing something that is arguably detrimental to them while being clearly beneficial to the business. In fact, most dark patterns violate one or more of the ten usability heuristics put together by Jakob Nielsen. The Amazon example violates the “visibility of system status” heuristic, for example: users cannot easily tell what clicking on the “Get Started” button will lead to, which means that the status of the system is not transparent. The issue with dark patterns is that, in most cases, they aren’t as clear-cut. Take, for example, an old character deletion screen from World of Warcraft. If they want to delete a character in WoW, players not only get a confirmation screen but also have to type in ‘DELETE’. Is this a dark pattern to make people think twice before deleting a character? It’s clearly not beneficial for Blizzard when players delete characters, as maybe it means that they are done with the game. So, are they adding steps to make it harder to delete a character, just like Amazon makes it hard to unsubscribe from Amazon Prime? Well, in the case of WoW, I would actually argue that it’s just good UX. One of the usability heuristics is “error prevention”. To prevent users from taking actions that could have a negative impact on them (e.g. closing a piece of software without saving after working in it for two hours), it is usually good practice to increase the “physical load” (i.e. add a click to the process) via a confirmation screen, to ensure that this is really what they wanted to do. The confirmation screen in WoW where players have to type in ‘DELETE’ is good UX: players can spend hours, weeks, months, or even years developing a character, so it’s important to add extra steps to avoid accidental deletion. Of course, if the character had a sad face or was crying on this screen as the player is about to get rid of it, then we could argue that the emotional guilt trip would make it a dark pattern.
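The “type DELETE to confirm” pattern can be sketched in a few lines. This is a hypothetical Python helper written for illustration, not Blizzard’s implementation; the function names and character names are invented. The irreversible action only proceeds when the player types the exact confirmation word, which adds deliberate friction without any emotional guilt trip.

```python
from __future__ import annotations

CONFIRMATION_WORD = "DELETE"  # the word WoW asks for; helper names below are hypothetical

def is_deletion_confirmed(typed: str, required: str = CONFIRMATION_WORD) -> bool:
    """True only if the player typed the confirmation word exactly.

    Surrounding whitespace is ignored but case must match, so a stray
    keypress or an accidental Enter cannot trigger the deletion.
    """
    return typed.strip() == required

def delete_character(roster: list[str], name: str, typed: str) -> list[str]:
    """Return a new roster without `name` if the deletion was confirmed.

    When confirmation fails, an unchanged copy of the roster is returned,
    so the irreversible step never happens by accident.
    """
    if not is_deletion_confirmed(typed):
        return list(roster)
    return [character for character in roster if character != name]

if __name__ == "__main__":
    roster = ["Aria", "Borin"]  # hypothetical character names
    print(delete_character(roster, "Borin", "delete"))    # ['Aria', 'Borin']
    print(delete_character(roster, "Borin", " DELETE "))  # ['Aria']
```

The friction here protects the player’s own investment, which is what separates error prevention from a dark pattern that merely makes leaving harder.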

o What could the game industry do regarding dark patterns?

As Thaler and Sunstein say in their book Nudge, “there is no such thing as ‘neutral’ design”. Every design, system implementation, art direction, or choice offered manipulates users one way or another. It’s understandable that parents fear their kids might be manipulated by videogames, but manipulation is everywhere. Magicians manipulate our attention to entertain us, our car manipulates us into fastening our seatbelts or getting gas, our microwave manipulates us into taking our food out once the program we started has ended, an artist manipulates our emotions with their art, journalists manipulate us by telling a story and presenting information in a certain way (as I’m currently doing), and so on. There is no question that videogames manipulate players; rather, the question is whether this manipulation is detrimental to players. Are videogames exploiting our brain limitations for the sole purpose of making a profit at the expense of players’ well-being? This is the real question that we, as an industry, need to address.

Manipulation can be benevolent (e.g. designing a mug with a handle to influence users to grab it by the handle and thus lift it without burning themselves when it contains a hot beverage, aka “good UX”, or “nudge” when it’s for the user’s own good or for the greater good), careless (e.g. a mug designed without a handle because it was deemed more aesthetically pleasing, with no consideration of usability or users’ health, aka “bad UX”), or malevolent (e.g. in a pharmacy, offering a hot beverage in a badly designed mug with the intention of selling a soothing cream to the burnt customers afterwards, aka “dark pattern”). It’s not always clear whether a design is a dark pattern or not: it depends on the context and mostly on the intention behind it. We could argue that the environment humans live in is, for the most part, designed in a way that discriminates against disabled people, underrepresented minorities, or women (see the excellent book Invisible Women by Caroline Criado Perez for many examples of the latter). In some cases it’s intentional (e.g. making it more difficult for people of color to register to vote), but in most cases it’s a careless oversight due to the implicit biases and blind spots that the people shaping our world and technology (mostly white able-bodied cis heterosexual men) necessarily have, like the rest of us. This does not mean it should not be fixed just because it’s not intentional, but it’s a different problem (hence the popularity of unconscious bias training and the importance of accessibility awareness).

When companies place their business and revenues first without considering all users’ well-being, they are going to lean towards dark pattern practices. Companies that think about users first, in a win-win mindset (beneficial to both users and the business), lean towards a UX strategy and culture. This is why we need to think about ethics and define, at the studio level and at the industry level, the line that we should not cross. Making games is a hard endeavor: most free-to-play games are barely surviving while gamers are increasingly demanding. If a game doesn’t provide new content at a regular pace, players get bored and move on to another game, which could mean the end of the project or even the studio (see for example the demise of Telltale). As a result, many studios copy a business model (e.g. seasons) or feature (e.g. loot boxes) that seemed to work well in another game to keep their own game afloat, without asking themselves whether it’s ethical. Most unethical practices are (I hope!) in the careless manipulation bucket (bad UX) rather than full-on dark patterns (i.e. meant to intentionally deceive or harm users for business benefits), which means we need to better understand the impact of our decisions on our players, especially the youngest ones. Here are a few examples for your consideration.

– Guilt tripping. Making players feel guilty when they avoid a paid option or a more expensive one (e.g. a character that cries if players do not buy it, or do not buy gems to keep playing after losing all their lives).

– Capitalizing on loss aversion. For example, letting players invest time in a character or environment for free first, then asking them to pay or else lose all of their progress.

– Capitalizing on the fear of missing out (FoMO). If players are not in the game at a certain time, or do not play enough, they will miss out on something (such as desirable loot) that is available only for a limited time. For example, if players want to obtain a very desirable item (character, skin, emote, etc.), they have to play every day for the duration of a season in order to obtain it before it’s gone forever. Another example would be daily chests with a cumulative bonus (i.e. the more consecutive days you log in, the better the reward).

– Insidious pay to win. For example, players cannot be fully competent in the game unless they pay to obtain an item that is otherwise very hard to get and that has gameplay value (unlike cosmetic items), and this is not clearly communicated to them.

– Pay to remove friction. For example, making a desirable gameplay item take a long time to obtain unless the player pays to get it sooner, or, back in the day, asking players to insert another coin into the arcade machine to keep playing and overcome the “game over”.

– Social obligation. For example, telling players that if they do not join a mission or play at a certain cadence, they will let their teammates down.

Many of these tactics are used by free-to-play games (and increasingly by paid-upfront games), and were refined in other industries long before arriving in the videogame industry (and even long before researchers tried to understand why they were so compelling). They are widely used in our society. And in certain cases, these techniques can even foster good UX. For example, putting an emphasis on social obligation in team-based games like MOBAs (multiplayer online battle arenas) is important to avoid antisocial behaviors (such as leaving a match before it is over because you feel your team is not going to win, thereby letting down everyone else on the team). As mentioned above, it’s not the design by itself that can be deemed a dark pattern or not. We need to consider the intention behind the design and the context in which it is used. When these techniques are directly tied to monetization, we can argue that they are ethically debatable. And if these games are targeted at children or teenagers, I would argue that it’s more clearly unethical given that, as I mentioned earlier, this population does not have a mature prefrontal cortex, which makes it harder for them to resist temptation. And when games initially targeted at adults are rated T for Teen or E for Everyone, and it turns out that many children are playing them, a reassessment of the monetization techniques is necessary if the company cares about ethics.

As a general rule of thumb, I would recommend that companies never punish disengagement in any of their games. For example, there is an important difference between a daily reward design that lets players get a very desirable item if they log in on, say, any 15 days (not necessarily consecutive) of a 30-day season, and one that forces players to log in every single day to obtain that item. No design is ever neutral. Placing humans at the center of what we are creating is thus critical when considering ethics. This is why UX practitioners, while not solely responsible for being the guardians of ethical practices, can raise awareness and point out possible unethical practices when they spot them, in the same way that they advocate for accessibility, inclusion, and fair play in games. Since UX should be everyone’s concern, and since a UX strategy and culture cannot be achieved without top management on board, UX practitioners need support, and game studios should define their values and consider having an ethics committee, preferably open to public scrutiny.
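The two daily-reward designs contrasted above can be written as two eligibility rules. In this hypothetical Python sketch, a season is a list of 30 booleans (True means the player logged in that day); the 30-day season and 15-day threshold come from the example in the text, and the function names are invented for illustration. A longest-streak helper is included because that is what a consecutive-day bonus effectively counts.

```python
from __future__ import annotations

SEASON_LENGTH = 30  # days in a season, from the example in the text
REQUIRED_DAYS = 15  # login days needed under the lenient design

def eligible_any_days(logins: list[bool], required: int = REQUIRED_DAYS) -> bool:
    """Lenient design: the reward unlocks after `required` login days, in any order."""
    return sum(logins) >= required

def eligible_every_day(logins: list[bool], season_length: int = SEASON_LENGTH) -> bool:
    """Streak design: every day is required, so one missed day forfeits the reward."""
    return len(logins) == season_length and all(logins)

def longest_streak(logins: list[bool]) -> int:
    """Longest run of consecutive login days, as a streak bonus would count it."""
    best = current = 0
    for logged_in in logins:
        current = current + 1 if logged_in else 0
        best = max(best, current)
    return best

if __name__ == "__main__":
    every_other_day = [day % 2 == 0 for day in range(SEASON_LENGTH)]  # 15 logins
    missed_last_day = [True] * (SEASON_LENGTH - 1) + [False]          # 29 logins
    print(eligible_any_days(every_other_day), eligible_every_day(every_other_day))  # True False
    print(eligible_any_days(missed_last_day), eligible_every_day(missed_last_day))  # True False
```

A player who logs in on 29 of 30 days still loses everything under the streak rule, while the lenient rule tolerates breaks: that asymmetry is what “punishing disengagement” looks like in code.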

4. Violence

o Main public concern regarding violence in games

There is a common concern that violent videogames can make players more aggressive in real life.

o What science says about violent videogames

Despite the numerous studies on the impact of violent videogames over the past fifteen years, there is no evidence that violent videogames cause players to commit acts of violence. In fact, videogames were found not to be associated with aggressive behavior in teenagers (not even among “vulnerable” populations). I will therefore not waste any more of your time and mine on this aspect. Instead, I would like to discuss the ethics of certain topics or content portrayed in videogames. Violent videogames may not be associated with real-life aggression, but that does not mean their content is not problematic. I mentioned earlier that the human brain has two “operating modes”, System 1 (automatic and effortless) and System 2 (slow and effortful). System 1, while very useful for the countless tasks and decisions we need to get through our days, is also heavily biased. And these biases are implicit (unconscious, so to speak). When our implicit biases go unchecked, we can make irrational decisions for ourselves (see the example of the ultimatum game in game theory), or perpetuate discrimination against others. For example, in the context of recruitment, women are discriminated against compared to men even when their resumes are exactly the same (see Harvard Business Review’s many articles on gender discrimination). Keep in mind that this topic is complicated and nuanced, and that some studies discuss the often unclear role of implicit biases in perpetuating discrimination. That being said, here’s another example: the Doll Test. In the 1940s, psychologists Mamie and Kenneth Clark explored the impact of segregation on African-American children. They found that black children tended to describe the dark-skinned dolls as “bad” and to see the white-skinned dolls as looking “more like them”. The most troubling part of this story is that similar effects are still found in the 21st century.
This is one of the reasons why it is so important to have diversity in our society and cultural products. To be clear, the science on this aspect is far from settled, which is why I will move on to the ethical aspects of videogame content.

o What could the game industry do regarding violence?

I hope that you will agree that women are not biologically less competent than men, and that having white skin does not biologically make someone better. We thus need to explore the origin of these biases in our culture and society. Our culture has an impact on which stereotypes and implicit biases are reinforced and perpetuated, although we do not currently understand this impact well. However, if you consistently witness women portrayed as less competent than men, you might implicitly integrate this harmful stereotype as being generally true. This is how classical (Pavlovian) conditioning works: when two stimuli are paired enough times, we can learn to associate them (e.g. ‘being a woman’ and ‘not being good at math’). Similarly, if you constantly see in advertisements and movies that toothpaste is spread along the entire length of the toothbrush, you might come to believe that this is the expected and correct behavior (it’s not: using a pea-sized amount of toothpaste is what is actually recommended). Of course, how we are supposed to behave with women, people of color, or disabled people is nowhere near comparable to our behavior with toothpaste. My point is that we are constantly conditioned, influenced, and manipulated by our environment and culture; sometimes in good ways, sometimes in harmful ways. And videogames today represent a big share of our entertainment consumption. Although any given game doesn’t by itself have an impact on, say, how women perceive their bodies, it is hard to argue against the fact that women are objectified overall in our culture, which in turn necessarily has some sort of impact on the implicit biases reinforced in the population. It is therefore my contention that videogames, being part of our culture, have some level of responsibility to consider.

I will reiterate here that no design is neutral. A game necessarily encourages some behaviors (i.e. rewards them, or doesn’t punish them), while it discourages other behaviors (punishes them, or doesn’t reward them). Game developers use this knowledge to help players navigate the game and, overall, have fun. However, behaviors that are neither desired nor by design, such as antisocial behaviors, can sometimes happen in games when they were not anticipated or controlled for. This is why multiplayer games have codes of conduct and punish players who behave in a harmful way toward other players (e.g. harassing them), for example by banning them for a certain amount of time. And this is why an organization like the Fair Play Alliance exists to promote safe game environments and help game developers protect their players from antisocial behaviors.

Game studios should monitor which behaviors they are encouraging and discouraging in their games, at the very least to ensure that all players can have fun while being safe within the game and on their community management platforms. Moreover, game developers need to reflect on what they are normalizing (or worse, glorifying) in the name of fun. As Miguel Sicart puts it in his book The Ethics of Computer Games, “games force behaviors by rules”. My point here is not made from a virtue ethics perspective that would define playing games with immoral content (such as killing virtual people) as an unethical activity. Rather, my contention is aligned with Sicart’s in considering the player as a moral agent who can make choices within the game environment. For example, players can choose to drive over and kill pedestrians in GTA V, while this is not a choice that players of Driver: San Francisco have (pedestrians in the latter game always end up avoiding cars). So-called “sandbox games” surely offer players more agency than scripted games, yet players’ agency is still limited to what was planned by game designers. For example, players in GTA: Vice City can choose to have sex with a prostitute to gain extra health, and can decide to kill the prostitute afterwards to regain their money. Within the system of the game and given its virtual nature, this action is not necessarily immoral, and gamers will argue that it is a good way to maximize in-game benefits while minimizing in-game costs (a behavior usually called “min-maxing”). However, the game does not offer another way to min-max health in the prostitute example. The game system doesn’t allow players to make friends with or fall in love with the prostitute, which could in turn diminish the cost of getting increased health. Therefore the “sandbox” aspect of the game is still limited by which behaviors are allowed, rewarded, and punished by game designers.
When unethical actions are rewarded in a game while their moral opposites are not even a possibility (unlike in Black & White, for example, where both ethical and unethical actions are possible), we can argue that the game has unethical content.

Arguing that some games have unethical content is not the same as saying that these games have a real-life immoral impact on society. The problem is that researching such an impact is very difficult, if possible at all, given that we cannot isolate players from the rest of society in an experiment to measure the sole impact of specific games on their beliefs and real-life behaviors. However, whether or not condoning certain harmful behaviors has a negative impact on society (by virtue of conditioning), we should still consider the ethics of our content. Am I arguing that playing a videogame with unethical content will lead to real-life harmful behaviors? No. My contention is that videogames can participate in condoning or glorifying certain harmful behaviors, such as antisocial behaviors or harassment (or worse) against women or minorities.

If we believe that videogames can be a force for good, then we cannot shy away from discussing whether they can also be harmful. In the preface of his book Persuasive Games, Ian Bogost says “In addition to becoming instrumental tools for institutional goals, videogames can also disrupt and change fundamental attitudes and beliefs about the world, leading to potentially significant social change.” We cannot celebrate the positive impact videogames bring to society, as the Games for Change Festival does every year, without considering the negative impact they might also have. Therefore, we should:

• Be held accountable for the content in our games. Consider whether your game is perpetuating discrimination or, worse, glorifying harmful behaviors.

• Protect players from antisocial behaviors (see Fair Play Alliance).

• Be conscious that people with bad intentions might use our games and social platforms to harm others.

If you’re interested in the topic, you can, for example, look at the Human rights guidelines for online games providers, developed by the Council of Europe in co-operation with the Interactive Software Federation of Europe (although I would argue that it needs a better definition of “harmful behaviors” and “excessive use of online games”, as well as a scientific approach to reassess certain claims). You can also explore the website Humane By Design, created by designer Jon Yablonski.

Conclusion

Videogames are increasingly popular. It is thus becoming imperative that game developers and studios spend more time and effort reflecting on the responsibilities and moral duties of the game industry. As Miguel Sicart says, “[Game developers] should not just be told that they are morally accountable, but also understand why and how they are morally accountable.” This is why I humbly offered my cognitive science perspective on this topic, which others have tackled from different angles, to help advance ethics in videogames. My aim was also to debunk the many misconceptions and myths around videogames and the brain, which not only distract the public and potentially create moral panics, but also slow down ethical reflection by game studios, which are then more likely to respond defensively to the numerous negative impacts they are accused of. And while the public is focused on the potential negative impact of videogames, people forget that games can promote emotional resilience, have positive effects on many different aspects of our behavior (such as vision, attention, or mental rotation), be educational, or be used for rehabilitation. While these benefits are critical to consider when scrutinizing the potential negative impact of videogames, we cannot, as an industry, respond to criticism only by emphasizing the benefits of playing games. We must also face those criticisms, debunk the pseudoscience, and understand the truth behind the public concern so that we can address the real issues. Maybe PEGI and ESRB ratings could evolve to better inform parents, and parental controls could be made easier to use and more effective by understanding the psychology behind problematic gaming. Lastly, it might be time for our industry to openly and seriously reflect on ethics, and maybe even elaborate an ethics code we could all pledge to follow, just as some major game companies (sadly, not enough of them) pledged to take action on the climate crisis.

About the Author: Celia Hodent, PhD, is an expert in video game UX (user experience) and cognitive psychology. She is a speaker, writer, and acclaimed author of The Gamer’s Brain: How Neuroscience and UX can Impact Video Game Design.


Many thanks to Fran Blumberg, Séverine Erhel, Yann Leroux, Chris Ferguson, and Mark Humphries for their precious feedback on this piece.

